A managed VPS hosting provider must be able to adapt to every user’s demands. A multi-national online business generating thousands in revenue every second should be able to get the same high-speed, reliable service as a blogger starting a hobby project.

For a large global enterprise, a single VPS won’t do the trick. Websites with heavy traffic and mission-critical apps often run on clusters of virtual servers that work together to provide excellent uptime and quick loading speeds.

This wouldn’t be possible without the load balancer – the component responsible for redirecting requests to individual virtual instances. It must be built with redundancy, security, and performance in mind, and it should be configured to serve data efficiently, regardless of the load.

Today, we’ll discuss some of the strategies for doing this.

Introduction to Load Balancing in Managed VPS Hosting

We all know how flexible and easily scalable a virtual private server is. You can add more resources with the click of a mouse, and if it’s deployed on a proper cloud infrastructure, there’s virtually no limit on how much you can upgrade it. However, for all its advantages, a single-VPS setup isn’t suitable for every project.

More specifically, it doesn’t quite deliver in two aspects that could be critical in some cases:

High availability

It’s an industry term for services engineered to stay online almost all the time, often with uptime targets of 99.99% or even 99.999% (known as “five nines”). A typical standalone virtual server can’t guarantee figures like these.

The problem is that if a VPS crashes, everything hosted on it goes offline. Modern cloud infrastructure allows hosts to quickly redeploy the server, but that takes at least a few minutes – fine for most people but not ideal if you’re running a mission-critical operation like mobile banking or traffic control, for example.
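
To put those percentages in perspective, here’s a quick Python sketch that converts an uptime target into the downtime it allows per year. The function name and the list of targets are our own, chosen purely for illustration.

```python
# Allowed downtime per year for a given uptime percentage.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes (leap years ignored)


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Return how many minutes per year a service may be offline."""
    return MINUTES_PER_YEAR * (1 - uptime_percent / 100)


for target in (99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% uptime allows about {minutes:.2f} minutes of downtime per year")
```

At 99.999%, the yearly budget is a little over five minutes, so a single redeploy that takes a few minutes can use up most of it.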

Blistering global performance

The more popular your website is, the more difficult it is to maintain excellent performance at all times. The additional load from a traffic spike is bound to slow the server down, and if you don’t have other instances to rely on – the drop can be noticeable.

Furthermore, if you have visitors from across the globe, some of them will need to wait for the data to travel the thousands of miles between the virtual server and their screen.

A cluster of managed cloud VPS servers is one of the few solutions that resolves these shortcomings. Two or more virtual machines (or nodes) are deployed and connected in a network. They all contain your project’s data and can serve incoming requests.

In addition to the hardware resources provided by the multiple servers, you get redundancy – if one of the instances fails, others are on hand to take up the slack and keep your project online. You can also deploy your servers in multiple locations worldwide and reduce latency.

There are two main approaches to building a VPS cluster:

- An Active-Standby topology – One primary node handles all the incoming requests, with the rest on standby. If there’s a fault with the primary server – it goes offline while one of the backup nodes kicks in to cover for it. If there’s an issue with the second one, the third one is brought up, etc.

- An Active-Active topology – Every node in the cluster processes requests at the same time.

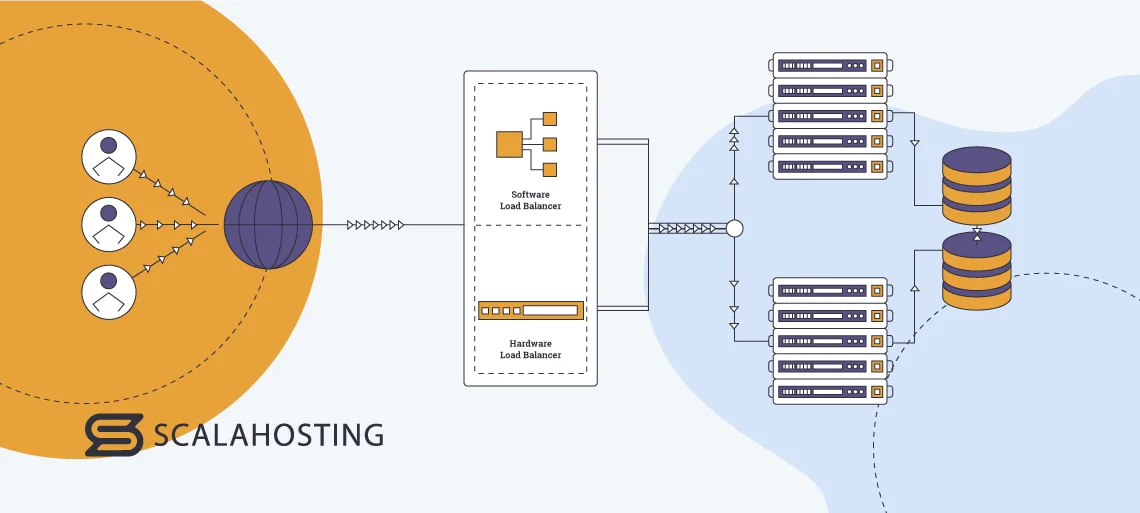

In both cases, there’s a load balancer between the internet and the network of virtual servers. Its job is to redirect every request to the most appropriate server in the cluster.

If you use an Active-Standby topology, the load balancer sends requests to the primary server. The other nodes are utilized only if there’s an issue with the primary one. The switch is known as a failover and is designed to be seamless. Its purpose is to ensure your website’s availability isn’t affected by any hardware issues.
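
The failover logic can be sketched in a few lines of Python. This is a simplified illustration, not how any particular load balancer is implemented: the node names are hypothetical, and the health flags would normally come from periodic health checks.

```python
from typing import Dict, List, Optional


def pick_active_node(priority: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy node in priority order, or None if all are down."""
    for node in priority:
        if healthy.get(node, False):
            return node
    return None


# Hypothetical node names: the primary comes first, standbys follow.
priority = ["primary", "standby-1", "standby-2"]
health = {"primary": True, "standby-1": True, "standby-2": True}

print(pick_active_node(priority, health))  # the primary serves traffic

health["primary"] = False                  # simulate a fault on the primary
print(pick_active_node(priority, health))  # traffic fails over to standby-1
```

If the second node also fails, the same loop simply moves on to the third one.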

In an Active-Active cluster, the load balancer uses one of several algorithms to pick which node will process each incoming request. Several servers work simultaneously and share the traffic, which keeps performance consistent. If one of the nodes goes offline, the traffic is immediately redirected to the rest of the machines, so users shouldn’t see any errors.

You can also deploy your VPS solutions in multiple locations across the globe and configure the load balancer to send every request to the server nearest to the client. This keeps latency low and loading speeds consistent for visitors, wherever they are.
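
One simple way to picture location-aware routing is to pick the region with the lowest measured latency to the client. The region names and latency figures below are made up for illustration; real setups often rely on geolocation data or DNS-based routing instead of a hand-written table.

```python
from typing import Dict


def nearest_region(latency_ms: Dict[str, float]) -> str:
    """Return the region with the lowest round-trip latency to the client."""
    return min(latency_ms, key=latency_ms.__getitem__)


# Hypothetical measurements for a single visitor.
client_latency = {"us-east": 95.0, "eu-central": 18.0, "ap-south": 140.0}
print(nearest_region(client_latency))
```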

But this is a very rough description of what a load balancer does. Let’s delve a bit deeper into the details.

Types of Load Balancers

The load balancer is critical in ensuring your managed VPS cluster delivers excellent performance and availability. But for everything to work correctly, you need to make sure you’ve picked and configured the right solution.

The two main types of load balancers are:

- A hardware load balancer is a physical device connected to your network. It has its own operating system and software designed to redirect traffic according to the set algorithm.

- A software load balancer (also called a virtual load balancer) is a software package, usually deployed on a virtual machine, that dispatches requests to the different cluster nodes. Unlike with a hardware load balancer, you don’t need a separate device or a specific operating system.

Pros & Cons

The virtual load balancer edges ahead in several key aspects.

First, you have the question of price. Hardware load balancers are not cheap, and neither is deploying them in the data center. Speaking of data centers, a hardware balancer is ideally situated in the same location as your managed VPS cluster. If you have nodes in multiple data centers, you need a load balancer in each of them, plus a central box redirecting the traffic to the different locations.

Furthermore, the whole point of using a managed VPS cluster instead of a lone server is to eliminate the single point of failure. If you have just one hardware load balancer handling all incoming requests, the entire network depends on its health.

A good VPS cluster has at least two load balancers in each location working in an Active-Standby configuration to ensure redundancy. In other words, the costs could quickly spiral out of control with a hardware load balancer.

By contrast, a virtual load balancer can be deployed on an affordable VPS solution. Coordinating several software load balancers at once is more straightforward and cheaper, especially if you have a multi-region cluster. Not to mention, they’re easier to scale – both vertically and horizontally.

Load balancers can also be categorized according to their place on the so-called OSI model. OSI stands for Open Systems Interconnection, and the model has been used since the 1980s to visualize the communication between the participants in a computer network.

According to OSI, there are seven layers:

- Layer 1: Physical layer

- Layer 2: Data link layer

- Layer 3: Network layer

- Layer 4: Transport layer

- Layer 5: Session layer

- Layer 6: Presentation layer

- Layer 7: Application layer

Load balancers can be classified as Layer 4 or Layer 7 load balancers. Here’s what you need to know:

- Layer 4 load balancing

A layer 4 load balancer operates at the transport layer. When a request arrives, it looks only at network-level details, such as the source and destination IP addresses and ports. It doesn’t inspect the payload, which is usually encrypted, and at the transport layer, the balancer has no way of decrypting it. With no further information, it simply applies the algorithm it’s been configured with to forward the request to one of the cluster nodes.

- Layer 7 load balancing

Layer 7 is the application layer; at this level, the load balancer can decrypt and read the data inside the request, such as the HTTP headers and the requested URL. Based on this information, it can decide to route the traffic to a specific server. A layer 7 load balancer can also modify the headers and the data before passing the request on.
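
The difference can be illustrated with a small Python sketch. Everything in it is an assumption made for the example: the `Request` fields, the node names, and the routing table are hypothetical, and a CRC32 checksum stands in for whatever algorithm the balancer is configured with.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Request:
    src_ip: str
    src_port: int
    path: str  # readable only once the HTTP request is parsed (layer 7)


BACKENDS = ["node-a", "node-b", "node-c"]            # hypothetical nodes
ROUTES = {"/api/": "node-a", "/static/": "node-b"}   # hypothetical rules
DEFAULT_BACKEND = "node-c"


def route_layer4(req: Request) -> str:
    """Choose a node from connection details only; the path is never read."""
    key = f"{req.src_ip}:{req.src_port}".encode("utf-8")
    return BACKENDS[zlib.crc32(key) % len(BACKENDS)]


def route_layer7(req: Request) -> str:
    """Choose a node based on the content of the request (here, the URL path)."""
    for prefix, backend in ROUTES.items():
        if req.path.startswith(prefix):
            return backend
    return DEFAULT_BACKEND


req = Request("203.0.113.7", 51000, "/api/users")
print(route_layer4(req), route_layer7(req))
```

Two requests from the same IP and port always get the same layer 4 decision, whatever they ask for, while the layer 7 function can send API calls and static files to different nodes.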

Pros & Cons

Layer 4 load balancing is simple and quick. The balancer doesn’t need to read, look up, or compare the data, so rerouting it takes less time and resources.

A transport-layer balancer typically forwards packets without terminating the client’s TCP connection, so a single connection runs between the source and the destination, and the overall load on the balancer is lower. And because no data is decrypted at this point, the balancer is a less attractive target for hackers.

The decryption of data at the application level makes layer 7 load balancers a possible point of entry, but attacks on properly configured clusters are very rare and tricky to pull off. At the same time, the balancer can take into account the nature of the data when rerouting it to a particular node, meaning a more flexible setup and more options for directing the right traffic to the right web server. Caching is also possible with layer 7 load balancing, so you have another opportunity to extract more performance from your website or application.

The downside is that layer 7 load balancing is trickier to set up, and because the balancer maintains separate TCP connections to the client and to the cluster node, you’re likely to reach its capacity more quickly.

The differences in how layer 4 and layer 7 load balancers handle requests also mean that they don’t support the same range of load-balancing algorithms.

Load Balancing Algorithms

A load-balancing algorithm comprises a set of rules that the load balancer sticks to when redirecting traffic to specific nodes. The implementation differs from cluster to cluster, and algorithms are usually customized to the client’s requirements. However, they can be grouped together based on the principles they adopt. Here are the most common types.

Round-robin load balancing

The load balancer forms a queue of servers and delivers each subsequent request to the next node in the line. When it gets to the end, the balancer goes back to the start.

For example, let’s assume you have a cluster of ten servers and eleven site visitors coming in one after the other, with each sending a single request. Visitor 1 will be sent to server 1, visitor 2 to server 2, etc., and when the load balancer gets to visitor 11, it will redirect them to server 1.

It’s a simple approach that works particularly well on a cluster of servers with identical hardware configurations. The algorithm keeps the load level similar at all nodes and ensures performance is consistent for everyone.
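
The ten-server example above can be reproduced with a minimal Python sketch. The server and visitor names are placeholders, and `itertools.cycle` stands in for the balancer’s internal queue.

```python
from itertools import cycle

servers = [f"server-{i}" for i in range(1, 11)]  # ten identical nodes
next_server = cycle(servers)                     # wraps around after the last node

# Eleven visitors arrive one after the other, each sending a single request.
assignments = {f"visitor-{v}": next(next_server) for v in range(1, 12)}

print(assignments["visitor-2"])   # server-2
print(assignments["visitor-11"])  # server-1 (the rotation has wrapped around)
```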

Weighted round-robin

The weighted round robin is a modification of the round robin algorithm particularly suitable for clusters where the nodes aren’t equally powerful. For example, imagine you have four servers with 2 CPU cores and 2GB of RAM each and one with 4 cores and 4GB of memory.

The weighted round-robin algorithm can ensure the more powerful node processes twice as many requests as the others. Once again, the idea is to keep the load even at all servers while also efficiently utilizing the hardware of your more powerful machines.
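
Here’s a minimal sketch of the idea, assuming four small nodes with a weight of 1 and one larger node with a weight of 2 (the names and weights are ours). It uses the simplest possible approach: each server gets as many slots in the rotation as its weight.

```python
from collections import Counter
from itertools import cycle
from typing import Dict, Iterator

# Hypothetical weights: the 4-core node gets twice the share of a 2-core node.
weights = {"small-1": 1, "small-2": 1, "small-3": 1, "small-4": 1, "big-1": 2}


def weighted_rotation(weights: Dict[str, int]) -> Iterator[str]:
    """Give each server `weight` slots in the rotation, then cycle forever."""
    slots = [name for name, weight in weights.items() for _ in range(weight)]
    return cycle(slots)


rotation = weighted_rotation(weights)
counts = Counter(next(rotation) for _ in range(600))
print(counts["big-1"], counts["small-1"])  # 200 100
```

This version sends the larger node its requests back to back. Production balancers usually interleave the slots so the extra requests are spread across the cycle.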

Least connection

In addition to rerouting traffic, the load balancer monitors how busy your servers are at any given point. It knows how many connections each node is processing, so an algorithm can use this information to redirect requests to the least busy node. Because it allows you to spread the load evenly, you stand a better chance of delivering the blistering loading speeds users expect.
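
In code, the decision boils down to picking the node with the smallest connection counter. The sketch below assumes the balancer keeps such a counter per node; the node names and numbers are invented for the example.

```python
from typing import Dict


def pick_least_connections(active: Dict[str, int]) -> str:
    """Return the node currently handling the fewest connections."""
    return min(active, key=active.__getitem__)


def assign_request(active: Dict[str, int]) -> str:
    """Route one new connection and update the chosen node's counter."""
    node = pick_least_connections(active)
    active[node] += 1
    return node


# Hypothetical snapshot of active connections per node.
active = {"node-a": 12, "node-b": 7, "node-c": 9}
print(assign_request(active))  # node-b, the least busy node
```

A real balancer would also decrement the counter whenever a connection closes.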

Weighted least connection

Imagine you have five web servers and thirty incoming connections that all stay open. If you use the standard least connection algorithm, the load balancer will send six connections to each server.

If your nodes are identical, this will result in an evenly spread load and predictable performance. However, some of the managed VPS servers in your cluster may be more powerful than others.

If you don’t take this into account, you may overload some servers while simultaneously underutilizing your more powerful machines. With a weighted least connection algorithm, you can configure the load balancer to reroute more traffic to the powerful nodes and keep the smaller ones healthy.
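
A common way to express this is to compare connections per unit of weight instead of raw connection counts. The sketch below replays the thirty-connection example under two assumptions of ours: the connections stay open, and one of the five nodes is given a weight of 2.

```python
from typing import Dict


def pick_weighted_least_connections(active: Dict[str, int], weights: Dict[str, int]) -> str:
    """Return the node with the fewest connections relative to its weight."""
    return min(active, key=lambda node: active[node] / weights[node])


def distribute(total: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Assign `total` long-lived connections one by one and return the tallies."""
    active = {node: 0 for node in weights}
    for _ in range(total):
        active[pick_weighted_least_connections(active, weights)] += 1
    return active


# Hypothetical cluster: four small nodes and one node that is twice as powerful.
weights = {"small-1": 1, "small-2": 1, "small-3": 1, "small-4": 1, "big-1": 2}
print(distribute(30, weights))
```

With these weights, the larger node ends up with ten connections and the smaller ones with five each, instead of six across the board.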

Least response time and weighted least response time

The least response time algorithm is an evolution of the least connection method. When the load balancer needs to route a new request and sees two or more nodes with the same number of active connections, it also checks their average response times. A short response time suggests that the server isn’t dealing with resource-intensive operations, so the pending request is assigned to it.

The weighted least response time variation also takes into consideration the hardware configuration of individual servers.
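
The tie-breaking rule described above maps neatly onto a tuple comparison: connections first, average response time second. The statistics below are invented, and real balancers may combine the two metrics differently.

```python
from typing import Dict

# Hypothetical per-node statistics collected by the load balancer.
stats = {
    "node-a": {"connections": 4, "avg_ms": 120.0},
    "node-b": {"connections": 4, "avg_ms": 45.0},
    "node-c": {"connections": 9, "avg_ms": 30.0},
}


def pick_least_response_time(stats: Dict[str, Dict[str, float]]) -> str:
    """Prefer fewer connections; break ties with the lower average response time."""
    return min(stats, key=lambda node: (stats[node]["connections"], stats[node]["avg_ms"]))


print(pick_least_response_time(stats))  # node-b: tied with node-a on connections, but faster
```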

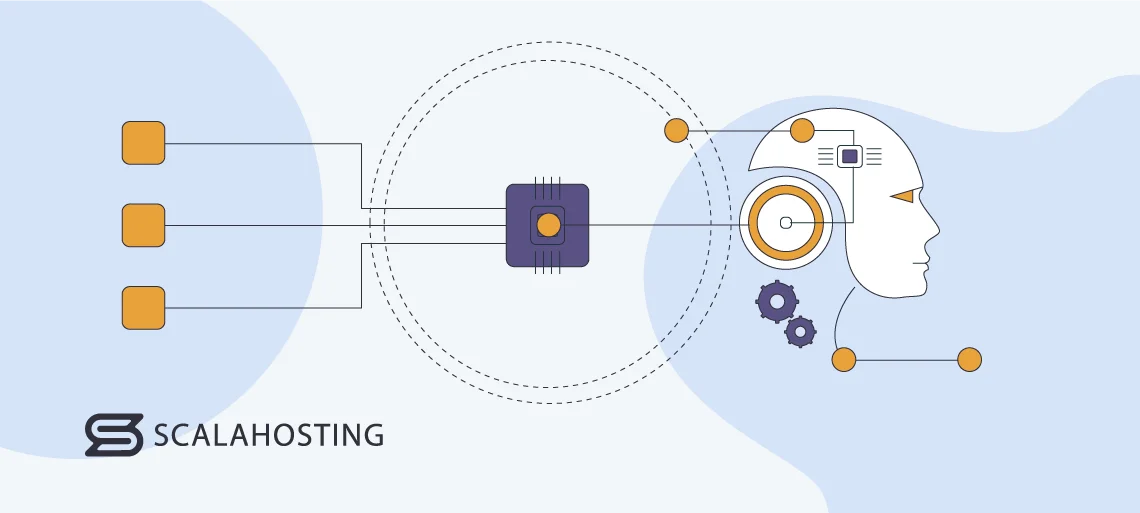

Resource-based

Resource-based load balancing redirects each incoming request to the cluster node with the lowest overall load at any given time. It relies on a special software agent installed on every server, which keeps an eye on the node’s hardware resources and monitors how much of the power is available. Individual server loads change all the time, and the balancer makes constant adjustments to guarantee the best possible performance.
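
As a rough sketch, suppose each agent reports the fraction of CPU and memory in use on its node. The report format and the scoring rule (judge a node by its most constrained resource) are assumptions made for this example, not a description of any specific product.

```python
from typing import Dict

# Hypothetical agent reports: fraction of CPU and memory currently in use.
reports = {
    "node-a": {"cpu": 0.82, "mem": 0.40},
    "node-b": {"cpu": 0.35, "mem": 0.55},
    "node-c": {"cpu": 0.20, "mem": 0.90},
}


def load_score(report: Dict[str, float]) -> float:
    """Score a node by its most constrained resource (higher means busier)."""
    return max(report["cpu"], report["mem"])


def pick_least_loaded(reports: Dict[str, Dict[str, float]]) -> str:
    """Return the node whose busiest resource has the most headroom."""
    return min(reports, key=lambda node: load_score(reports[node]))


print(pick_least_loaded(reports))  # node-b
```

Note that node-c has the idlest CPU but is nearly out of memory, which is why it isn’t chosen.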

IP hash or URL hash

An IP hash load balancer takes the request’s source IP and passes it through a hash function to produce a hash key, which it then maps to one of the servers in the cluster. Whenever the load balancer gets a request from that IP, it calculates the same hash key and assigns the client to the same server, as long as the set of servers doesn’t change. It’s a good choice if you want to make sure specific IP addresses are always served by the same nodes, for example, to keep a visitor’s session on one server.

The URL hash algorithm is a variation of the IP hash. The difference is that the load balancer hashes the requested URL instead of the source IP. This algorithm can ensure different nodes serve different content.
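
Both variants can share the same helper, since the only difference is the key being hashed. In this sketch, the node names are hypothetical, and SHA-256 is used simply because it ships with Python and gives the same result on every run (Python’s built-in `hash()` is randomized per process for strings).

```python
import hashlib
from typing import List

SERVERS = ["node-a", "node-b", "node-c"]  # hypothetical node names


def pick_by_hash(key: str, servers: List[str]) -> str:
    """Map a key (source IP or URL) to a server; the same key gives the same server."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


print(pick_by_hash("203.0.113.7", SERVERS))         # IP hash
print(pick_by_hash("/images/banner.png", SERVERS))  # URL hash
```

One caveat of this simple modulo approach is that adding or removing a server remaps most keys. Techniques such as consistent hashing exist to limit that reshuffling.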

The algorithms above can be divided into two groups. The resource-based, least connection, and least response time methods are used in what’s known as dynamic load balancing: the load balancer takes into account the current situation of your servers before deciding which node is best suited to process the pending request.

The round robin and the IP/URL hash algorithms represent static load balancing, in which traffic is redirected according to rules that remain the same regardless of the cluster’s current condition.

Both dynamic and static algorithms have pros and cons, and which approach you should take depends on the project’s requirements and the infrastructure you’ve built. For example, if you have a multi-region cluster and want the same visitors to keep landing on the same nodes, consider configuring your load balancer to work with an IP hash algorithm. Bear in mind that a hash of the IP address says nothing about where the visitor actually is, so routing by location usually also involves a geolocation lookup or DNS-based routing. On the other hand, if all your cluster nodes are situated in the same data center, you’re looking to make the most of the available hardware and extract the best possible performance, so a dynamic algorithm is likely your best bet.

Choosing an algorithm isn’t something you just tick off a to-do list, though. In addition to forming an integral part of your entire server cluster, the load balancer can be an excellent source of analytics data.

You can see how the infrastructure performs under certain conditions and make adjustments to the algorithm of your choice. Modern load-balancing solutions can even automate some of this and identify potential bottlenecks before they actually slow you down.

There is yet another aspect that the load balancer can help with.

Load Balancing and Security

The load balancer is responsible for receiving and redirecting every request sent to your server cluster. It processes a lot of traffic and deals with various scenarios. If you properly log and audit all these events, you could end up with tons of useful information that can help you optimize the cluster’s performance and beef up its security.

For example, you can use tools and services that automatically analyze the incoming requests’ IP addresses, headers, and payloads and identify potential anomalies or abusers. This allows you to take precautions and keep your data safe. The only thing you need to remember is to ensure the logs themselves are adequately secured, as they could potentially hold a lot of sensitive information.
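
As a very basic illustration of this kind of analysis, the sketch below counts requests per IP address and flags the ones above a threshold. The log format, the field names, and the threshold are all hypothetical; dedicated tools look at far more signals than raw request counts.

```python
from collections import Counter
from typing import Dict, Iterable


def flag_noisy_ips(entries: Iterable[Dict[str, str]], threshold: int) -> Dict[str, int]:
    """Return the IPs that sent more than `threshold` requests, with their counts."""
    counts = Counter(entry["ip"] for entry in entries)
    return {ip: n for ip, n in counts.items() if n > threshold}


# Hypothetical, already-parsed load balancer log entries.
log = [{"ip": "198.51.100.4", "path": "/login"}] * 50 + [
    {"ip": "203.0.113.7", "path": "/"},
    {"ip": "203.0.113.9", "path": "/pricing"},
]
print(flag_noisy_ips(log, threshold=20))  # {'198.51.100.4': 50}
```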

Another added benefit of a good load balancing solution is DDoS (Distributed Denial of Service) protection.

Out of the box, a VPS cluster is much more resilient to DDoS attacks than a single-server setup, as you have multiple machines capable of handling much more traffic than a lone virtual server. If you’re dealing with a particularly large campaign, however, even your network of VPSs may be overwhelmed. Fortunately, with a properly configured load-balancing solution, most of the attack traffic can be stopped before it reaches your infrastructure.

Modern load balancers can be configured to identify DDoS-related activity and automatically redirect unwanted traffic to a public cloud provider. Instead of flooding your cluster, all junk requests are sent to infrastructure owned by companies like Google and Amazon. They can easily absorb the traffic and have the power to analyze the source of the attack and take precautions to minimize future nefarious activity.

Meanwhile, your managed VPS cluster remains fully operational and completely isolated from the DDoS threat.

ScalaHosting Managed VPS Clusters

Managed VPS hosting has been at the center of ScalaHosting’s portfolio for over a decade now. Over the years, we’ve built a reputation as one of the leading providers of cloud virtual private servers, and we’ve attracted the attention of the owners of some high-profile projects.

Some of our clients’ apps and websites aren’t entirely suitable for a single-VPS setup, though. They need a high-availability service with excellent speed and a reliable failover mechanism ensuring no hardware faults can disrupt their operation.

This is exactly what our managed VPS cluster solutions provide. High-traffic websites and mission-critical applications can take advantage of VPS clusters built by industry experts with decades of experience.

Our solutions are tailored to the individual projects’ specific requirements, and the customization options are pretty much limitless. You can have all your servers in a single data center, working in an Active-Standby topology, or set up a multi-location Active-Active network.

In all cases, our system administrators will implement robust load balancing solutions designed to spread the load evenly and maintain perfect performance even during busy periods. To guarantee the reliability you’re after, your cluster never bets on a single load balancer. There’s always at least one backup, and in a multi-region cluster – the setup is replicated in every data center, eliminating any chance of downtime.

Because every project has individual needs, our experts are on hand to speak to you, find out more about what you’re trying to achieve, and draw up plans for the best solution for you. They will then build your cluster and, because the service is fully managed, configure it to provide the best possible hosting environment for your site.

Don’t hesitate to contact us if you have any more questions.

Conclusion

Load balancing is central to any multi-node hosting infrastructure. With it, you can get the performance and reliability benefits of using more than one server. However, building a good load balancing solution is trickier than you might think.

Technology has evolved to the point where you have many different options and quite a few factors that can alter the direction you’re heading. Going through them carefully and planning every step gives you the best chance of making the most out of your managed VPS cluster.

FAQ

Q: What is the most widely used load balancing algorithm?

A: Round-robin is the most popular load balancing algorithm, implemented in production server farms worldwide. It does an excellent job in most scenarios and is easy to understand and put into action.

Q: What is dynamic load balancing?

A: A dynamic load balancer is constantly fed information regarding the health and load of each server. Based on this, it identifies which node can fulfill pending requests more efficiently and reroutes traffic to it. As the load on each server changes, the load balancer adapts and forwards traffic to the different machines in order to extract the best possible performance.

Q: What is the difference between load balancing and load sharing?

A: Load sharing implies that two or more servers work together to serve a website or an application, but the split isn’t necessarily even: one of them may be handling 90% of the traffic while the others process just 10%. Load balancing, by contrast, distributes requests in a way that ensures the efficient utilization of all the available hardware.