On August 16, 2013, Google suffered an unprecedented global outage. For about five minutes, all services offered by the company, including Google Search, Google Drive, YouTube, and Gmail, went offline and were inaccessible worldwide.

The result? At the time, analytics companies calculated that the incident had caused a 40% drop in global internet traffic.

So, what can we learn from this? Well, the incident shows that while uptime is vital for all websites, some outages are more costly than others.

That’s why, while your personal blog may be hosted on a single server, popular, mission-critical websites and applications need a more complex setup known as a high-availability system.

Today, we’ll take an in-depth look into what a high-availability cluster is and show you how it works in the context of managed virtual servers.

What Is a High-Availability System?

In a typical hosting setup, you have one physical or virtual server that hosts one or more websites. All the files and databases are stored on a single machine, which is responsible for processing all requests coming from the internet. If it goes down – your entire site goes down. In other words, there’s a single point of failure.

For some enterprises, the risks involved in this setup are too high.

Imagine, for example, a large ecommerce store with customers from across the world and hundreds of orders registered every second. Even a few minutes of downtime will result in hundreds of thousands of dollars in lost revenue and reputational damage that will be difficult to repair. If you run a mission-critical application that deals with things like online banking, traffic control, etc., the consequences of an outage could be even more severe.

Projects of this kind require a more reliable hosting infrastructure that doesn’t depend on a single server. At this point, we should probably clarify what we mean by “more reliable.”

Let’s assume you launched your site exactly 24 hours ago, and during this period, it went offline for 5 minutes because your host needed to reboot your server. A full day has 1,440 minutes, and 5 minutes is 0.35% of them. Hence, your application has 99.65% uptime.

This figure is unacceptable for a large money-making website or a mission-critical application. These projects aim for a benchmark known as “the five 9s,” or 99.999% uptime. In 24 hours, an acceptable outage for them would last no more than 0.0144 of a minute (or 864 milliseconds). The only way to achieve this is with a high-availability hosting infrastructure.
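To make the arithmetic above easy to reproduce, here is a minimal sketch in Python. The function names are our own, chosen for illustration, and the calculation simply restates the percentages from the example.

```python
MINUTES_PER_DAY = 24 * 60  # 1,440 minutes in a full day


def uptime_percent(downtime_minutes: float, period_minutes: float) -> float:
    """Share of the period during which the site was reachable, in percent."""
    return 100.0 * (1.0 - downtime_minutes / period_minutes)


def downtime_budget_seconds(target_percent: float, period_minutes: float) -> float:
    """Longest total outage (in seconds) that still meets the uptime target."""
    return period_minutes * 60.0 * (1.0 - target_percent / 100.0)


# A 5-minute reboot during the first day online
print(round(uptime_percent(5, MINUTES_PER_DAY), 2))                # 99.65

# The "five 9s" budget for a single day, in seconds
print(round(downtime_budget_seconds(99.999, MINUTES_PER_DAY), 3))  # 0.864
```

Running the same function over a 365-day year gives a budget of roughly 315 seconds, or a little over five minutes of downtime in total.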

A high-availability (HA) system consists of multiple servers (or nodes) connected in a cluster and configured to host the same website or application. There are many different setups, but in all cases, if one of the nodes goes offline – others can take over and ensure your site is accessible to the world.

This is how an HA cluster eliminates the single point of failure. Sounds straightforward enough, but how do you actually design and build a high-availability infrastructure?

There are three main concepts you need to pay close attention to. Let’s discuss them in a bit more detail.

Redundancy

In broader computing terms, building redundancy into a system means setting up multiple components that do the same thing. In our case, the components are the servers (or nodes) that make up our cluster. Their job is to process requests, deliver files, and read/write data from your site’s database.

If one server is knocked offline, the others keep your site accessible. As long as at least one cluster node is still working, users will be able to visit it.

Monitoring

Monitoring is an essential part of the operation of a high-availability cluster. A system, often built into the web server or the load balancer, must be on hand to keep track of the health of every cluster node. Based on this information, requests can be rerouted to specific nodes to guarantee uninterrupted uptime and excellent performance even under heavy traffic.
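As a rough illustration of the idea, the sketch below marks a node as unhealthy after a number of consecutive failed checks. Everything here is an assumption made for the example: the `HealthMonitor` class, the node names, and the `probe` callback, which in a real system would be something like an HTTP or TCP check.

```python
from typing import Callable, Dict, List


class HealthMonitor:
    """Marks a node unhealthy after `threshold` consecutive failed probes."""

    def __init__(self, nodes: List[str], probe: Callable[[str], bool], threshold: int = 3):
        self.probe = probe
        self.threshold = threshold
        self.failures: Dict[str, int] = {node: 0 for node in nodes}

    def check_all(self) -> None:
        """Run one probe round and update the failure counters."""
        for node in self.failures:
            if self.probe(node):
                self.failures[node] = 0   # any success resets the counter
            else:
                self.failures[node] += 1

    def healthy_nodes(self) -> List[str]:
        """Nodes that have not yet reached the failure threshold."""
        return [n for n, count in self.failures.items() if count < self.threshold]


# Simulated outage: node-b fails every probe (hypothetical node names)
down = {"node-b"}
monitor = HealthMonitor(
    ["node-a", "node-b", "node-c"],
    probe=lambda node: node not in down,
    threshold=2,
)
for _ in range(2):
    monitor.check_all()

print(monitor.healthy_nodes())  # ['node-a', 'node-c']
```

Requiring several failures in a row is a common way to avoid pulling a node out of rotation because of a single dropped packet.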

Failover

Failover is the action of switching from a server that is going down to one that is functioning correctly. If your failover mechanisms are good enough, users won’t notice that you’re redirecting them to a different node.

The concepts above should be the foundation of every high-availability cluster. Overall, there are many different approaches to this setup, and the one you choose strongly depends on the type of HA system you’re building.

Types of High-Availability Architecture

No two high-availability systems are the same. Providers use all kinds of different servers and virtual instances, and every cluster they build is tailored to the client’s specific requirements. The solutions tend to be pretty flexible.

A high-availability system is easy to scale horizontally by design. If your website’s demands outgrow the capacity of your current setup, you can easily add more servers to your cluster for more power and redundancy.

In light of all this, classifying HA systems according to size is a bit pointless. However, we can see several different approaches when it comes to other aspects of their operations.

Active-standby vs. active-active infrastructure

First, let’s examine how HA clusters handle requests and utilize the available servers.

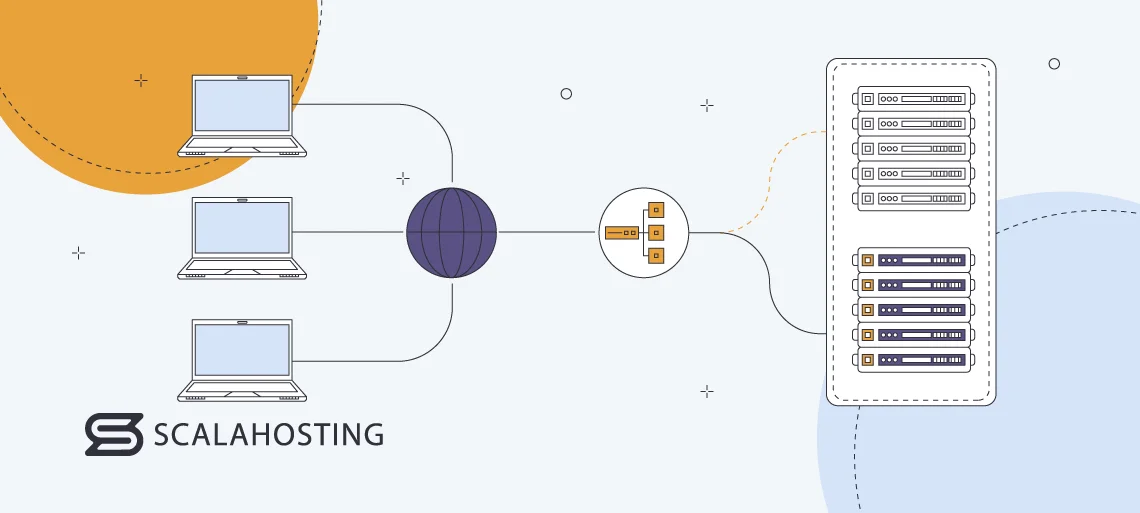

An active/standby high-availability setup

In this setup, a single primary server processes all incoming requests. The other nodes in your cluster are on standby – they’re turned on but don’t handle any traffic. A monitoring system keeps a close eye on the primary server’s health, and if it spots a problem – it immediately redirects the traffic to the second node, which starts handling the incoming requests.

If something happens to the second server – the traffic goes to the third one, and so on. Once the primary node is fixed, it’s brought back online and resumes processing the incoming requests.
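The selection logic described above can be sketched in a few lines of Python. The node names and the `pick_active` helper are hypothetical, and the health flags would normally come from the monitoring system rather than being set by hand.

```python
from typing import Dict, List, Optional


def pick_active(priority_order: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy node in priority order, or None if all are down."""
    for node in priority_order:
        if healthy.get(node, False):
            return node
    return None


nodes = ["primary", "standby-1", "standby-2"]
health = {"primary": True, "standby-1": True, "standby-2": True}

print(pick_active(nodes, health))  # primary

health["primary"] = False          # the primary fails
print(pick_active(nodes, health))  # standby-1

health["standby-1"] = False        # the second node fails too
print(pick_active(nodes, health))  # standby-2

health["primary"] = True           # the primary is repaired
print(pick_active(nodes, health))  # primary
```

Because the list is always scanned from the top, traffic returns to the primary as soon as it is reported healthy again.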

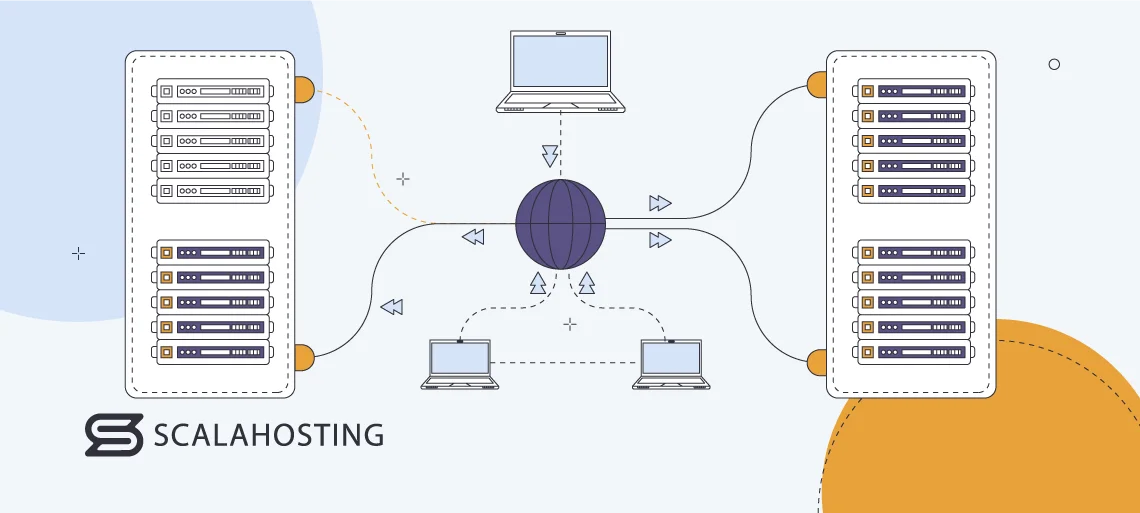

An active/active high-availability setup

In an active-active cluster, no single server handles all the traffic. Instead, a load balancer is situated between the internet and your application servers, and its job is to redirect incoming requests to different nodes in the cluster. The idea is to utilize server resources more efficiently by spreading the load among multiple machines. If one of the nodes in the cluster experiences issues, the requests destined for it are automatically sent to the other servers.

Pros & cons

An active-active infrastructure improves both the availability and performance of your site. On the one hand, this provides the redundancy that is essential to all HA clusters, and on the other – your website has constant access to a large volume of hardware resources, meaning you can expect faster loading speeds.

In terms of complexity, an active-standby infrastructure is more straightforward. Fewer components are involved in the operation, and there is less work configuring everything to work in unison.

The simpler setup lowers the price, but while an active-standby architecture may be cheaper in absolute terms, you’re still paying for resources that remain unused most of the time. From that point of view, you’re more likely to get your money’s worth with an active-active cluster.

Single-site vs. multisite infrastructure

Building your high-availability cluster with virtual machines makes a lot of sense. They’re cheaper to run and much more flexible when it comes to hardware configuration. However, they still need to be deployed on physical servers, which, in turn, are located in data centers. You have two options.

Single site cluster

With a single site setup, all your nodes are situated in the same data center. Virtual instances may be deployed on different physical machines for better redundancy, but the data is served from the same location.

Multisite cluster

It is possible to run your high-availability cluster from multiple data centers. You can deploy your nodes wherever you want. One of them may be situated in Europe, you can have another in the US, and the third one may be in the Far East. The only limiting factor would be the locations your host offers.

Pros & cons

If your online project attracts a global audience, the benefits of a multisite architecture should be obvious. In an active-active setup, you can configure your cluster to analyze individual requests and serve them from the location closest to the visitor (just like a content delivery network).
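One simple way to picture the "closest location" decision is to compare great-circle distances between the visitor and each data center. The sketch below does exactly that. The region names and coordinates are made up for illustration, and real deployments often rely on DNS-based or latency-based routing rather than raw distance.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0

Coordinates = Tuple[float, float]  # (latitude, longitude) in degrees


def haversine_km(a: Coordinates, b: Coordinates) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(visitor: Coordinates, regions: Dict[str, Coordinates]) -> str:
    """Name of the region whose data center is closest to the visitor."""
    return min(regions, key=lambda name: haversine_km(visitor, regions[name]))


# Hypothetical data center locations
regions = {
    "europe": (50.11, 8.68),
    "us-east": (40.71, -74.01),
    "asia": (1.35, 103.82),
}

print(nearest_region((48.86, 2.35), regions))    # europe (visitor near Paris)
print(nearest_region((43.65, -79.38), regions))  # us-east (visitor near Toronto)
```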

The downside of a multisite cluster is the added complexity. Maintaining the cluster is also more challenging, and because incoming requests may be rerouted to nodes in different countries – the additional network traffic could offset the performance benefits.

However, in addition to the CDN-type behavior and the faster loading speeds, there is one more scenario where a multisite high-availability architecture offers valuable advantages. If your nodes are deployed in a single location, and there’s a catastrophic failure or a natural disaster at that location – your entire cluster will go down with it.

It’s worth pointing out that data center operators do a lot to ensure this never happens. They run state-of-the-art facilities and follow dozens of security protocols covering all kinds of scenarios. Nevertheless, disasters do happen sometimes, and the consequences are pretty horrific.

It should be abundantly clear that even the smallest high-availability cluster is a complex system with multiple components critical to its operation. Let’s examine them more closely.

Load Balancing and High Availability

You can think of the load balancer as a dispatcher that receives requests from the internet and reroutes them to different nodes in the cluster. Of course, this is a very simplified overview.

Remember the key concepts that must be applied to every high-availability system – redundancy, monitoring, and failover? You can ensure redundancy thanks to the multiple application servers connected to the cluster. For monitoring and failover – you turn to the load balancer.

Through it, your high-availability infrastructure knows how well each of your servers is doing and can redirect traffic accordingly.

We’ll learn how it does that in a minute, but first, let’s see the different varieties of load balancers.

Types of load balancers

There are two main types of load balancers:

Hardware load balancers

A hardware load balancer is a physical device connected to your high-availability cluster. It may be situated in the same data center as your application servers but could also be in a different location. A hardware load balancer has a specialized operating system and tools that help sysadmins configure the mechanisms for rerouting requests to individual nodes.

Software load balancers

In the past, software load balancers used to be simply the software taken from the physical load balancers and adapted to work in a standardized environment. Nowadays, things are different.

A software load balancer can run on a separate physical or virtual server hosted on-premises, in a data center, or on the public cloud. Often, it’s a component of the default software stack installed on your hosting account and could even be integrated into the web server.

Pros & cons

A hardware load balancer is traditionally considered the more advanced option because you have an entire hardware device created with a single purpose in mind. However, in recent years, software load-balancing solutions have come on in leaps and bounds, and thanks to their vast range of features, they are now considered the better option for most projects. Because they are often integrated into the software stack, the software solutions are usually cheaper and easier to deploy, maintain, and scale.

Load balancing algorithms

When a request comes in, the load balancer has to decide which cluster node will process it. Crucially, the traffic must be rerouted to keep the overall load at reasonable levels while maintaining excellent performance.

To ensure all this, system administrators implement load balancing algorithms – rules that load balancers follow when assigning incoming requests to cluster nodes.

There are several well-known load-balancing algorithms that can be grouped into two categories:

Static load balancing algorithms

A static load balancing algorithm consists of set-in-stone rules that are applied regardless of things like the current state of each server, traffic volumes, etc.

Here are a few examples:

- Round-robin – The requests are assigned sequentially (i.e., the first request goes to server 1, the second to server 2, the third to server 3, etc.).

- Weighted Round-robin – This variation of the round-robin algorithm allows administrators to reroute more traffic to cluster nodes that can handle a greater number of requests at once.

- IP hash – The load balancer hashes the source IP address into a key and uses it to determine which server should receive this particular request. It’s an excellent solution to ensure traffic from a specific IP address consistently hits the same server.
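The three static algorithms above can be sketched as follows. This is a simplified illustration with made-up server names. The weighted variant uses the most naive approach (repeating each server according to its weight), and the choice of SHA-256 for the IP hash is our own assumption, not something a particular load balancer is known to use.

```python
import hashlib
import itertools
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Cycle through the servers in order, forever."""
    return itertools.cycle(servers)


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Naive weighted variant: each server appears `weight` times per cycle."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server; the same IP always yields the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["s1", "s2", "s3"]

rr = round_robin(servers)
print([next(rr) for _ in range(5)])   # ['s1', 's2', 's3', 's1', 's2']

wrr = weighted_round_robin({"big": 2, "small": 1})
print([next(wrr) for _ in range(6)])  # ['big', 'big', 'small', 'big', 'big', 'small']

# Repeated requests from one address land on the same server
print(ip_hash("203.0.113.7", servers) == ip_hash("203.0.113.7", servers))  # True
```

None of these functions looks at how busy a server is, which is exactly what makes them static.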

Dynamic load-balancing algorithms

A dynamic load-balancing algorithm adds rules that apply to the current state of your high-availability cluster. If your load balancer uses such an algorithm, it will consider one or more factors before deciding which server is best equipped to process every single one of the incoming requests.

Here are a few examples of a dynamic load-balancing algorithm:

- Least connection – The incoming request is automatically sent to the server with the fewest open connections.

- Weighted least connection – Some servers can handle more connections than others. By assigning each node a weight, admins can modify the least connection algorithm to factor in not only the number of open connections but also each server’s capacity.

- Resource-based – Each server in the cluster has an agent installed on it that monitors the amount of available memory and processing power. This agent constantly communicates with the load balancer, so the balancer knows which servers are busy and which can take more requests.
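Here is a minimal sketch of the dynamic algorithms listed above. The server names and figures are invented for the example. For the weighted variant, we assume the common formulation in which each server carries an administrator-assigned weight, and for the resource-based variant we assume each agent reports a single "free capacity" figure between 0 and 1.

```python
from typing import Dict


def least_connections(open_connections: Dict[str, int]) -> str:
    """Pick the server with the fewest open connections."""
    return min(open_connections, key=lambda server: open_connections[server])


def weighted_least_connections(
    open_connections: Dict[str, int], weights: Dict[str, int]
) -> str:
    """Pick the server with the lowest connections-to-weight ratio."""
    return min(open_connections, key=lambda server: open_connections[server] / weights[server])


def resource_based(free_capacity: Dict[str, float]) -> str:
    """Pick the server whose agent reports the most free capacity (0.0 to 1.0)."""
    return max(free_capacity, key=lambda server: free_capacity[server])


connections = {"s1": 12, "s2": 7, "s3": 9}
print(least_connections(connections))                    # s2

# s1 is a larger machine, so it is allowed four times the share
weights = {"s1": 4, "s2": 1, "s3": 1}
print(weighted_least_connections(connections, weights))  # s1

print(resource_based({"s1": 0.20, "s2": 0.55, "s3": 0.40}))  # s2
```

Unlike the static rules, each decision here depends on a snapshot of the cluster’s current state, so the same request could go to a different server a second later.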

Typically, administrators implement a combination of two or more static and dynamic algorithms to guarantee excellent performance and consistent speeds while maintaining the server load at manageable levels.

Load balancing and security

Every component in your high-availability system must be configured with security in mind, and the load balancer is no exception. Over the years, admins have tried to use load balancers for numerous security-related tasks. Some of the practices made sense at the time of their introduction but are now considered obsolete.

For example, in the past, load balancers were often used for something known as SSL termination (or SSL offloading). In basic terms, administrators used the balancer as a decryption device that unscrambled the SSL-encrypted requests before passing them on to the application server. This meant less load on the cluster nodes and quicker response times.

However, SSL termination is now considered risky because it effectively means that the data flow between the load balancer and application servers is not protected. The risk is particularly severe for multisite clusters, where information needs to travel between data centers.

Using the load balancer to decrypt data is not a very good idea. However, using it to protect your project from DDoS attacks is.

Modern load balancing solutions can automatically detect junk traffic and offload it to a cloud provider like Amazon or Google. The cloud is the most efficient way of absorbing DDoS campaigns nowadays, so if you configure your load balancer to take advantage of it – you stand a pretty good chance of going through severe web attacks unharmed.

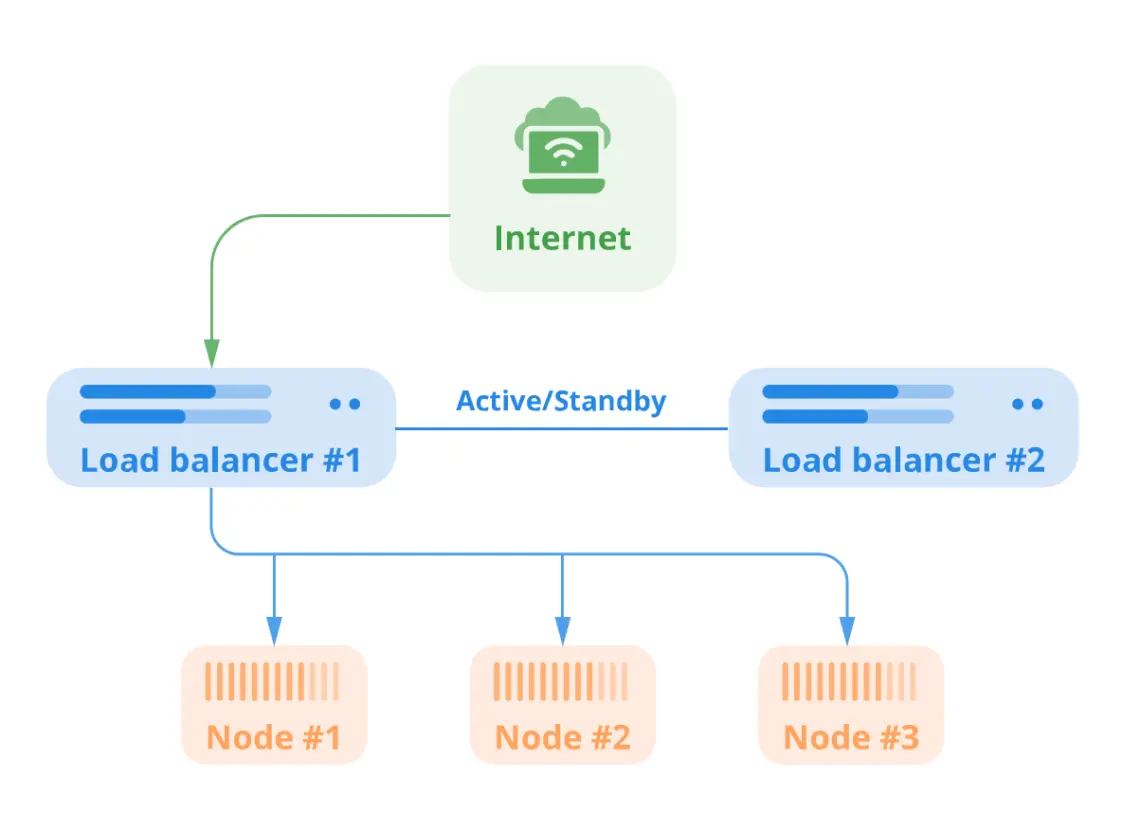

Redundancy in load balancing

So far, we’ve referred to the load balancer in the singular. However, a cluster with only one load balancer isn’t a true high-availability solution.

Without your load balancing solution, the entire cluster goes down, so if it consists of a single device or software application, you have a single point of failure – the very problem you set out to solve.

That’s why a well-designed high-availability setup has multiple load balancers working in either Active-Active or Active-Standby configuration. In a multisite setup, they are bound to be situated in different locations for additional redundancy. This guarantees that even if one of the data centers you use goes down – there is a load balancer that can keep the cluster online.

Virtual IPs and Their Failover Function

So far, we’ve explored the principles that high-availability clusters employ to direct requests to available nodes and keep your website running in the event of hardware failure.

It’s now time to see the mechanism in action.

Your cluster of servers is, in effect, a computer network not too dissimilar from the one you have at home. There, your computer, mobile phone, smart TV, and IoT devices are connected to the router, which acts as a gateway for traffic traveling to and from the internet. In your high-availability cluster, you have nodes instead of devices and a load balancer instead of a router.

The individual machines in any computer network, regardless of the size, must be easily identifiable. In the case of your HA cluster, for example, the load balancer must be able to identify the individual nodes, check the load, and redirect the traffic to the most suitable destination.

The IP address is the most commonly used identifier when dealing with computer networks of any kind. In your HA cluster, individual nodes are assigned virtual IP addresses (or VIPAs). The IPs are virtual because they’re not tethered to a physical network interface.

The load balancer has an up-to-date list of all the VIPAs in the cluster and the MAC addresses they correspond to. It knows where each request goes at any given time. If a VIPA needs to be reassigned – the list must be updated.

The load balancer exchanges information with all the virtual IPs in the cluster. If a VIPA stops responding, there’s an apparent problem with one of the servers, and traffic sent to it is immediately diverted to a healthy node. This is what the all-important failover process looks like.
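A toy model can help make the mapping and the reassignment step more concrete. The `VipTable` class below is purely illustrative, and the addresses are placeholders taken from the documentation IP range. In a real network, the reassignment is handled by the operating system and the network itself rather than by a Python dictionary.

```python
from typing import Dict


class VipTable:
    """Toy model of a load balancer's virtual-IP-to-MAC mapping."""

    def __init__(self, mapping: Dict[str, str]) -> None:
        self.mapping = dict(mapping)

    def resolve(self, vip: str) -> str:
        """Return the MAC address currently answering for this virtual IP."""
        return self.mapping[vip]

    def reassign(self, vip: str, new_mac: str) -> None:
        """Point a virtual IP at a different machine, e.g. after a node failure."""
        self.mapping[vip] = new_mac


# Placeholder addresses for two nodes
table = VipTable({
    "192.0.2.10": "02:00:00:00:00:0a",
    "192.0.2.11": "02:00:00:00:00:0b",
})

print(table.resolve("192.0.2.10"))  # 02:00:00:00:00:0a

# The first machine stops responding, so its virtual IP moves to the healthy one
table.reassign("192.0.2.10", "02:00:00:00:00:0b")
print(table.resolve("192.0.2.10"))  # 02:00:00:00:00:0b
```

Requests keep arriving at the same virtual IP throughout. Only the machine behind it changes.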

However, remember that the information flow must work both ways. When nodes process a request and send the required information back, it must pass through the load balancer before being sent to the user. Like the nodes, the load balancer has its own IP. However, its address is referred to as “floating.”

A floating IP is similar to a virtual one in that it’s not connected to a hardware network interface – it’s completely invisible to the outside world. However, unlike a virtual IP, it can be reassigned to a different device more easily. So, if the primary load balancer goes offline, the floating IP is automatically transferred to a backup, and the responses from the application servers are delivered without disruption.

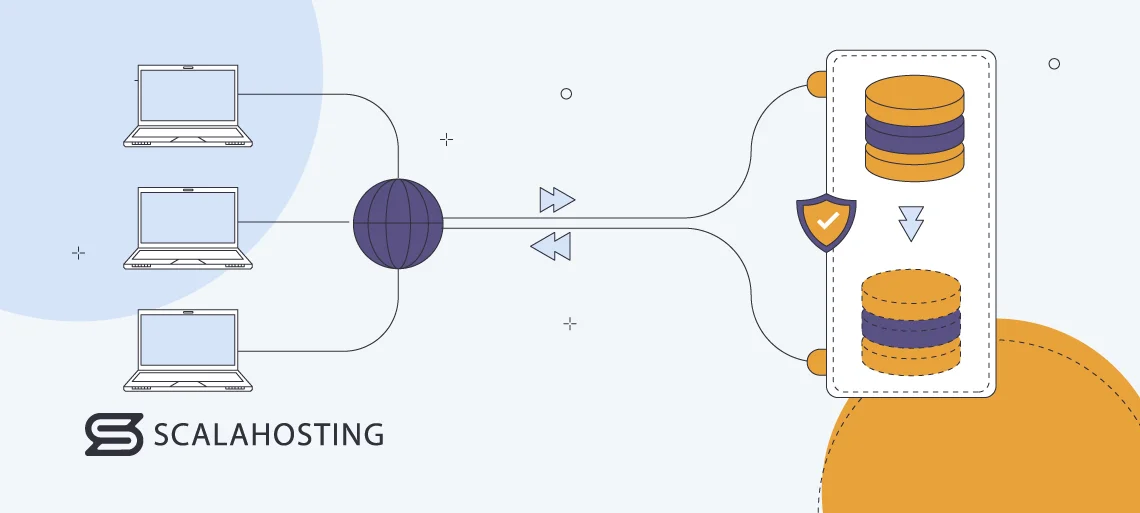

High Availability and Data Replication

Redundancy, combined with an advanced monitoring system and a reliable failover switch, can practically eliminate the risk of users getting a blank screen or an error message when they try to access your site. However, you’re not completely out of the woods just yet.

In a traditional setup, you often have one set of files and one database hosted on a single server. With a high-availability infrastructure, you must ensure the files and the database are available on all cluster nodes. This is a complex task in itself, made even trickier by the level of interaction modern websites tend to offer.

Nowadays, something as small as a click of a button may generate a new data entry that has to be stored for future use. On a busy website, this happens hundreds or thousands of times a second, and in a high-availability environment – the challenge is to ensure the changes are visible to millions of people at once.

In the past, there were two ways to do this.

- A shared disk architecture – To build a shared disk infrastructure, you set up an external storage device and configure your cluster nodes to work with it. All the data generated by users, regardless of which server they’re connected to, is saved on the same disk and is available for use by the other machines.

- A shared-nothing architecture – In a shared-nothing architecture, nodes write information to their internal disks, and an automatic data replication mechanism ensures it is copied on all servers in the network.

It’s essential to reflect all changes simultaneously. The process is known as synchronous data replication (as opposed to asynchronous, where updates are made periodically), and it’s the only way to guarantee high availability with zero data loss.
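The difference between the two replication modes is easier to see in code. The in-memory classes below are a deliberately simplified sketch with hypothetical names. A real replication mechanism also has to deal with network failures, ordering, and conflicts, none of which are modeled here.

```python
from typing import Dict, List, Tuple


class Replica:
    """A copy of the data held on another node."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def apply(self, key: str, value: str) -> None:
        self.data[key] = value


class SyncPrimary:
    """Finishes a write only after every replica has applied it."""

    def __init__(self, replicas: List[Replica]) -> None:
        self.data: Dict[str, str] = {}
        self.replicas = replicas

    def write(self, key: str, value: str) -> None:
        self.data[key] = value
        for replica in self.replicas:
            replica.apply(key, value)  # the write returns only after all copies match


class AsyncPrimary:
    """Finishes a write immediately and ships queued changes later."""

    def __init__(self, replicas: List[Replica]) -> None:
        self.data: Dict[str, str] = {}
        self.replicas = replicas
        self.pending: List[Tuple[str, str]] = []

    def write(self, key: str, value: str) -> None:
        self.data[key] = value
        self.pending.append((key, value))  # replicas are stale until flush()

    def flush(self) -> None:
        """Periodic job that copies queued changes to every replica."""
        for key, value in self.pending:
            for replica in self.replicas:
                replica.apply(key, value)
        self.pending.clear()


sync_replica = Replica()
SyncPrimary([sync_replica]).write("order-1", "paid")
print(sync_replica.data)   # {'order-1': 'paid'}

async_replica = Replica()
async_primary = AsyncPrimary([async_replica])
async_primary.write("order-1", "paid")
print(async_replica.data)  # {} (the change would be lost if the primary died now)
async_primary.flush()
print(async_replica.data)  # {'order-1': 'paid'}
```

The empty dictionary in the middle of the output is the window in which an asynchronous setup can lose data.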

Both setups have their pros and cons. For example, a shared disk architecture offloads one of the most critical jobs to an outside device, which reduces the load on the application servers. However, it also adds complexity to the network, making it more expensive.

Using the application servers’ internal disks sounds like a straightforward solution, but implementing a synchronous data replication mechanism is anything but simple, and ensuring redundancy with this sort of setup is tricky.

Thankfully, the advancement in cloud technology has enabled technology companies to innovate and create a solution that seems far superior to the traditional approaches.

- Distributed storage – A distributed storage architecture involves storing data on multiple physical or virtual machines. In addition to your application servers, you have a cluster of nodes responsible for data storage only.

A distributed storage solution breaks down the data into chunks and stores different portions on different storage nodes. Every application server in the high-availability cluster has direct access to the information and can find whatever is required at any time.

The distributed storage setup has several key benefits when compared to the traditional solutions:

- Redundancy – Distributed solutions can store multiple copies of the same data on different nodes. The data is easily recoverable even if one of the servers in the cluster goes offline.

- Cost – The price of cloud resources has dropped significantly in recent years, and because distributed storage lets you use them to their full potential – you can safely say that this is the most cost-effective solution out there.

- Performance – A distributed storage setup can deliver data much quicker than traditional solutions, especially using cloud infrastructure deployed in multiple geographical regions.

- Scalability – Scaling a distributed infrastructure according to your needs is both easy and cheap.
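To illustrate the chunking and redundancy ideas, here is a minimal sketch that splits a piece of data into chunks and assigns each chunk to more than one storage node. The node names, the chunk size, and the simple rotating placement rule are all assumptions made for the example. Production systems use far more sophisticated placement strategies.

```python
from typing import Dict, List


def split_into_chunks(data: bytes, chunk_size: int) -> List[bytes]:
    """Break the data into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def place_chunks(chunk_count: int, nodes: List[str], copies: int = 2) -> Dict[int, List[str]]:
    """Assign each chunk to `copies` distinct nodes, rotating the starting node."""
    if copies > len(nodes):
        raise ValueError("cannot store more copies than there are nodes")
    return {
        index: [nodes[(index + offset) % len(nodes)] for offset in range(copies)]
        for index in range(chunk_count)
    }


data = b"abcdefghij"
chunks = split_into_chunks(data, chunk_size=4)
print(chunks)     # [b'abcd', b'efgh', b'ij']

storage_nodes = ["n1", "n2", "n3"]  # hypothetical storage nodes
placement = place_chunks(len(chunks), storage_nodes, copies=2)
print(placement)  # {0: ['n1', 'n2'], 1: ['n2', 'n3'], 2: ['n3', 'n1']}

# Even if n2 goes offline, every chunk still has a copy on another node
survivors = {i: [n for n in holders if n != "n2"] for i, holders in placement.items()}
print(all(survivors.values()))  # True
```

With two copies of every chunk, the cluster in this example can lose any single storage node without losing data.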

Thanks to these benefits, global cloud hosting services like AWS are powered by a robust distributed storage infrastructure. Not surprisingly, the same goes for our high-availability solutions.

ScalaHosting and High-Availability Clusters

Uptime is essential for ScalaHosting’s business. If our company website goes down even for a few short minutes, we might be facing lost sales, but the damage to our reputation is much worse. After all, how can you trust a hosting provider with your project when you see that it’s struggling to keep its own site up at all times?

That’s why our website is hosted on an advanced high-availability cluster situated in multiple data centers worldwide. All nodes work simultaneously, and data is delivered to the user from the closest location. When one of our servers goes down, the rest are on hand to take up the slack. The result?

We’ve had no notable outages in ages, and tests show that when it comes to important performance metrics like Time To First Byte (TTFB), we’re on par with and sometimes even faster than Google.com.

We know that some of our clients need similar performance and uptime, and to help them, we offer advanced high-availability cluster solutions that cater to their requirements.

You can choose from three main architectures:

Single DC cluster

Multiple VPS servers are deployed in the same data center and connected in a cluster. They’re all responsible for processing users’ requests. Above them, there are two load balancers in an Active-Standby configuration. If one of the load balancers fails, the other is on hand to take up the slack.

The combined power of several virtual servers guarantees blistering performance for your site, and the load balancers ensure the virtual machines aren’t overloaded even during traffic spikes. Simultaneously, the built-in multi-level redundancy is designed to eliminate the chances of an outage.

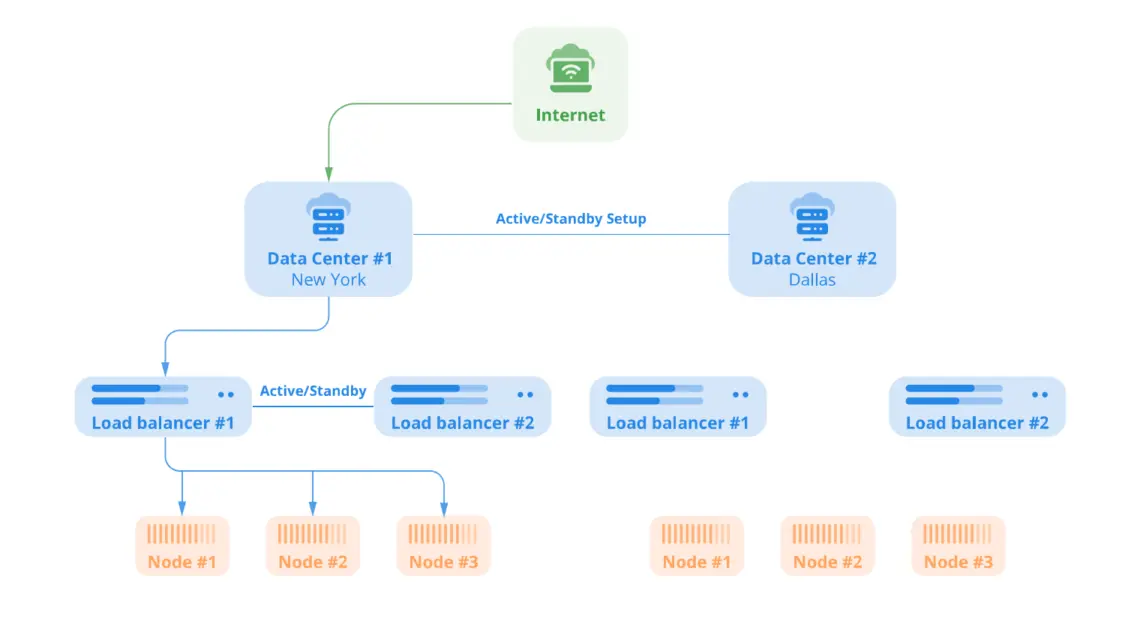

Multi Data Center cluster

With a multi DC cluster, the configuration in the single DC setup is replicated in a second data center. Most of the time, the VPSs in the second data center don’t process any requests – they remain on standby and only kick in if the first facility goes offline.

In other words, the multi DC cluster employs an Active-Standby architecture to guarantee your site will remain operational even if one of the data centers stops responding. Furthermore, the backup data center can help you keep your site online during regular maintenance. This setup is all about redundancy and boosting uptime.

Multi region cluster

The third and most intricate architecture involves multiple servers deployed not just in different data centers but in different countries. The cluster follows an Active-Active model, meaning all the virtual servers process requests simultaneously.

What’s more, each visitor’s connection is routed to the nearest location. The data has less distance to cover, so it’s delivered to the user more quickly.

If you have a busy website with visitors from all over the world and need excellent loading speeds at all times – this is the high-availability setup for you.

Of course, the exact cluster specifications must be tailored to your project’s requirements. There are no off-the-shelf plans you can purchase and deploy in a few seconds. Instead, our technical experts are on hand to carefully examine your website and give you a detailed proposal of the sort of solution you’ll need.

You don’t have to worry about setting anything up yourself. Our VPS clusters are fully managed, meaning the Scala technicians are in charge of deploying the infrastructure and ensuring it’s properly configured and operational at all times.

You remain focused on building your project while having the perfect platform to do it with. ScalaHosting’s VPS clusters are powered by SPanel – our proprietary server management platform. We have been developing it for years, working hard to make it the best control panel of its kind currently on the market.

Throughout this time, we have used feedback from our customers to determine which features to implement, and we’ll continue to do it because we believe the people who use it daily know what’s best for its future development.

If you want to learn more about our high-availability solutions, do not hesitate to contact our experts, who will answer all your questions.

Conclusion

A high-availability cluster can be a complex system. You have several physical or virtual machines working in unison to serve your website, as well as multiple utilities that ensure coherent network performance and reliability even under heavy load.

Multi-server cluster solutions are quite cost-effective for high-traffic businesses and can produce some truly remarkable results. You just need to ensure your setup is built and configured in line with your project requirements.

FAQ

Q: What is high availability?

A: The purpose of a high-availability system is to minimize downtime as much as possible using an infrastructure with built-in redundancy and a failover mechanism. The setup involves multiple servers working together to process visitor requests. If one of the nodes goes down, the others take over and keep the site online. The goal of every high-availability system is to achieve 99.999% uptime.

Q: What three things do I need to achieve high availability?

A: Redundancy ensures that you have backup resources that can be employed in case one of your primary servers goes offline. A monitoring system is critical for detecting any hardware failures before they disrupt your site’s performance. A reliable failover switch should reroute the requests from the failed server to the backup nodes.

Q: What’s the difference between a high-availability system and a backup?

A: A high-availability system provides the infrastructure to ensure your site stays online even in the case of hardware failure. By contrast, a backup is used to restore the site after it goes down. Even if your project runs on a high-availability network, you must still ensure you have a working backup of your files and databases, just in case.